Dynamic Kubelet Configuration


At launch time, the Kubelet loads its configuration from pre-specified files. Changes to these files are not applied to the running Kubelet process, so the Kubelet must be restarted manually after every modification.

Dynamic Kubelet configuration removes this burden: the Kubelet monitors its configuration for changes and restarts itself when it is updated [1]. The configuration is stored in a Kubernetes ConfigMap object.

Kubelet Flags for Dynamic Configuration

Dynamic Kubelet configuration is not enabled by default, because one of the required settings is missing. The Kubelet needs the following flags for dynamic configuration:

- --feature-gates="DynamicKubeletConfig=true": enabled by default since Kubernetes 1.11 [2], so nothing needs to be done manually.
- --dynamic-config-dir=<path>: this flag must be set explicitly to enable dynamic Kubelet configuration.

Why Is the --dynamic-config-dir Flag Required?

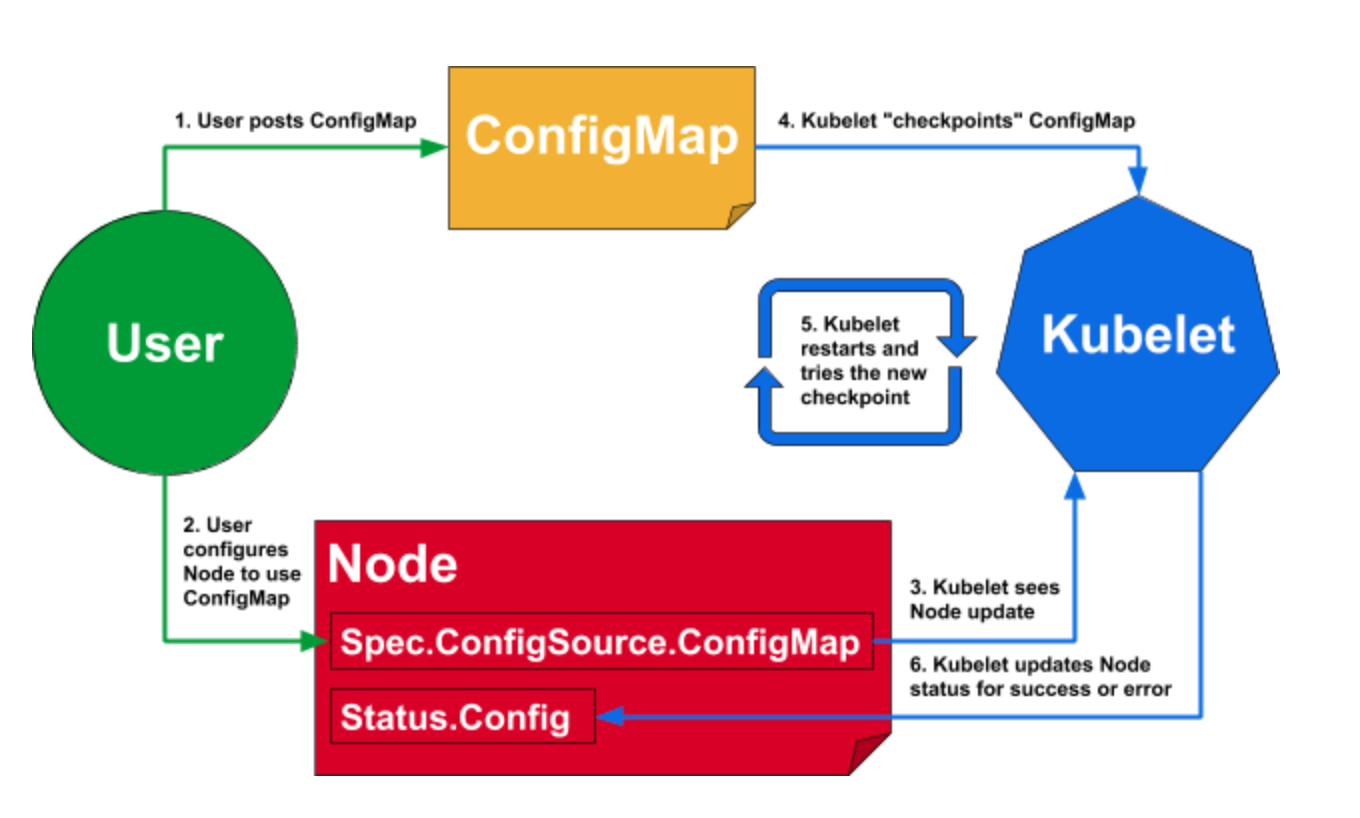

The figure above illustrates how the Kubelet applies a dynamic configuration.

In steps 4 and 5, the Kubelet "checkpoints" the modified ConfigMap from the apiserver to its local storage, and loads that data after restarting.

The checkpointed ConfigMap has to be stored somewhere on the node, and --dynamic-config-dir specifies that location.

When you specify --dynamic-config-dir, the Kubelet automatically initializes its config checkpoint store, as the following journal log shows:

Aug 21 18:54:30 localhost.worker kubelet[107800]: I0821 18:54:30.679461 107800 watch.go:128] kubelet config controller: assigned ConfigMap was updated

Aug 21 18:54:34 localhost.worker kubelet[107800]: I0821 18:54:34.776572 107800 download.go:181] kubelet config controller: checking in-memory store for /api/v1/namespaces/kube-system/configmaps/k8s-worker16

Aug 21 18:54:34 localhost.worker kubelet[107800]: I0821 18:54:34.776605 107800 download.go:187] kubelet config controller: found /api/v1/namespaces/kube-system/configmaps/k8s-worker16 in in-memory store, UID: 5808539f-48e3-4e47-8ce6-c261c48fa1d9, ResourceVersion: 437429

Aug 21 18:54:34 localhost.worker kubelet[107800]: I0821 18:54:34.806121 107800 configsync.go:205] kubelet config controller: Kubelet restarting to use /api/v1/namespaces/kube-system/configmaps/k8s-worker16, UID: 5808539f-48e3-4e47-8ce6-c261c48fa1d9, ResourceVersion: 437429, KubeletConfigKey: kubelet

Aug 21 18:54:34 localhost.worker systemd[1]: kubelet.service: Succeeded.

Aug 21 18:54:45 localhost.worker systemd[1]: kubelet.service: Scheduled restart job, restart counter is at 19.

Aug 21 18:54:45 localhost.worker systemd[1]: Stopped kubelet: The Kubernetes Node Agent.

Aug 21 18:54:45 localhost.worker systemd[1]: Started kubelet: The Kubernetes Node Agent.

Aug 21 18:54:45 localhost.worker kubelet[108430]: I0821 18:54:45.115977 108430 controller.go:101] kubelet config controller: starting controller

Aug 21 18:54:45 localhost.worker kubelet[108430]: I0821 18:54:45.116038 108430 controller.go:267] kubelet config controller: ensuring filesystem is set up correctly

Aug 21 18:54:45 localhost.worker kubelet[108430]: I0821 18:54:45.116045 108430 fsstore.go:59] kubelet config controller: initializing config checkpoints directory "/var/lib/kubelet/dynamic-config/store"

...
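The journal excerpt above shows the sequence: the config controller notices that the assigned ConfigMap was updated, looks it up, and logs "Kubelet restarting to use ..." together with the ConfigMap's UID and ResourceVersion before systemd starts a new Kubelet process. The decision at the heart of that sequence can be modeled as below. This is a simplified sketch, not the Kubelet's controller code: the type source, the function decide, and the older ResourceVersion used in main are all hypothetical.

```go
package main

import "fmt"

// source identifies one version of a ConfigMap, mirroring the UID and
// ResourceVersion printed in the "Kubelet restarting to use ..." log line.
// Hypothetical type for illustration only.
type source struct {
	UID             string
	ResourceVersion string
}

// decide models what happens when the controller notices a change: keep
// running if the assigned version is the one already in use; otherwise
// checkpoint the new payload (under --dynamic-config-dir) and exit so that
// systemd restarts the Kubelet with it.
func decide(inUse, assigned source) string {
	if inUse == assigned {
		return "keep running"
	}
	return "checkpoint and restart"
}

func main() {
	// The older ResourceVersion is an invented value; 437429 is from the log.
	inUse := source{UID: "5808539f-48e3-4e47-8ce6-c261c48fa1d9", ResourceVersion: "437000"}
	assigned := source{UID: "5808539f-48e3-4e47-8ce6-c261c48fa1d9", ResourceVersion: "437429"}

	fmt.Println(decide(inUse, assigned))    // checkpoint and restart
	fmt.Println(decide(assigned, assigned)) // keep running
}
```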

Dynamic Configuration Setup

Dynamic Kubelet configuration uses ConfigMap objects. Therefore, you need a ConfigMap object that holds the Kubelet configuration, and the Kubelet needs permission to access it.

Creating a ConfigMap

Dynamic Kubelet configuration cannot be combined with the existing configuration given by flags and the --config file.

In other words, even if a setting is given via a flag or the --config file, it is not applied; only the dynamic configuration is.

For this reason, [1] recommends starting from the node's current configuration:

$ kubectl proxy --port=8001 &

$ NODE_NAME="the-name-of-the-node-you-are-reconfiguring"; curl -sSL "http://localhost:8001/api/v1/nodes/${NODE_NAME}/proxy/configz" | jq '.kubeletconfig|.kind="KubeletConfiguration"|.apiVersion="kubelet.config.k8s.io/v1beta1"' > kubelet_configz_${NODE_NAME}

$ kubectl -n kube-system create configmap node-config --from-file=kubelet=kubelet_configz_${NODE_NAME}

In my environment this only worked on control plane nodes, and I have not yet figured out which permission is required for this operation.

I found that the ConfigMap does not need to be in the kube-system namespace; it also works if it is stored in the default namespace (in that case, drop -n kube-system from the command above).

Adding More Configurations to the ConfigMap

You should see your ConfigMap object with the following command (omit -n NAMESPACE if it is in the default namespace):

$ kubectl get configmaps -n NAMESPACE

Now you can edit the ConfigMap object with the edit subcommand:

$ kubectl edit configmaps node-config -n NAMESPACE

The format of the ConfigMap object looks like this:

apiVersion: v1
kind: ConfigMap
metadata:            # other fields are not important
  name: node-config
  namespace: default
data:
  kubelet: |         # <- Do not omit '|'.
    {
      "kind": "KubeletConfiguration",
      "apiVersion": "kubelet.config.k8s.io/v1beta1",
      <other Kubelet configurations in JSON format>
    }

The ConfigMap object itself is written in YAML, but its content in data.kubelet is JSON. Do not confuse the two.
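Because the Kubelet only reports a malformed payload after it restarts, it can help to check the JSON string under data.kubelet before saving the ConfigMap. The helper below is a hypothetical sketch: it rejects invalid JSON (for example a trailing comma) and a missing or wrong kind/apiVersion, but it does not validate the remaining Kubelet fields.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// checkKubeletPayload is a hypothetical pre-flight check for the string stored
// under data.kubelet: it must be valid JSON (no trailing commas or comments)
// and must carry the kind and apiVersion used in this post.
func checkKubeletPayload(payload string) error {
	var header struct {
		Kind       string `json:"kind"`
		APIVersion string `json:"apiVersion"`
	}
	if err := json.Unmarshal([]byte(payload), &header); err != nil {
		return fmt.Errorf("data.kubelet is not valid JSON: %w", err)
	}
	if header.Kind != "KubeletConfiguration" {
		return fmt.Errorf("kind is %q, want %q", header.Kind, "KubeletConfiguration")
	}
	if header.APIVersion != "kubelet.config.k8s.io/v1beta1" {
		return fmt.Errorf("apiVersion is %q, want %q", header.APIVersion, "kubelet.config.k8s.io/v1beta1")
	}
	return nil
}

func main() {
	good := `{"kind": "KubeletConfiguration", "apiVersion": "kubelet.config.k8s.io/v1beta1"}`
	noKind := `{"apiVersion": "kubelet.config.k8s.io/v1beta1"}`
	trailingComma := `{"kind": "KubeletConfiguration", "apiVersion": "kubelet.config.k8s.io/v1beta1",}`
	for _, p := range []string{good, noKind, trailingComma} {
		fmt.Println(checkKubeletPayload(p)) // <nil> only for the first payload
	}
}
```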

The available Kubelet configuration keys and values are listed [here].

In my case, I want to restrict allocatable resources via dynamic Kubelet configuration, so I added several Kubelet configuration fields to the ConfigMap object:

<omitted>
data:
  kubelet: |
    {
      "kind": "KubeletConfiguration",
      "apiVersion": "kubelet.config.k8s.io/v1beta1",
      <other default configurations are omitted>,
      "systemReserved": {
        "cpu": "2",
        "memory": "2Gi"
      },
      "kubeReserved": {
        "cpu": "1",
        "memory": "1Gi"
      },
      "systemReservedCgroup": "/system.slice",    <-- not necessary
      "kubeReservedCgroup": "/kubepods.slice",    <-- not necessary
      "cgroupsPerQOS": true,
      "enforceNodeAllocatable": ["pods"]
    }

Note that:

- systemReservedCgroup and kubeReservedCgroup are not necessary, since I do not put "system-reserved" and "kube-reserved" in enforceNodeAllocatable.
- cgroupsPerQOS should be true to restrict resources for Kubernetes Pods, and enforceNodeAllocatable must contain neither system-reserved nor kube-reserved; otherwise you will see the following weird error in the Kubelet log (I am not sure whether it is a bug):

Aug 26 16:55:47 localhost.worker kubelet[388430]: F0826 16:55:47.291827 388430 kubelet.go:1384] Failed to start ContainerManager Failed to enforce System Reserved Cgroup Limits on "/system.slice": ["system"] cgroup does not exist
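The two rules in these notes can be turned into a small pre-flight check on the data.kubelet payload. This is a hypothetical helper (lint and allocatableSettings are made-up names) that encodes only the rules stated above, treating an omitted cgroupsPerQOS as true because that is the Kubelet's default; it is not how the Kubelet itself validates its configuration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// allocatableSettings holds only the KubeletConfiguration keys discussed in
// the notes above (hypothetical helper type, not the Kubelet's own struct).
type allocatableSettings struct {
	// A pointer distinguishes "not set" from false; the Kubelet defaults
	// cgroupsPerQOS to true when the key is omitted.
	CgroupsPerQOS          *bool    `json:"cgroupsPerQOS"`
	EnforceNodeAllocatable []string `json:"enforceNodeAllocatable"`
}

// lint decodes the data.kubelet payload and returns the problems the notes
// above warn about.
func lint(payload string) ([]string, error) {
	var s allocatableSettings
	if err := json.Unmarshal([]byte(payload), &s); err != nil {
		return nil, err
	}
	var problems []string
	if s.CgroupsPerQOS != nil && !*s.CgroupsPerQOS {
		problems = append(problems, "cgroupsPerQOS is false, so pod resources are not restricted")
	}
	for _, key := range s.EnforceNodeAllocatable {
		if key == "system-reserved" || key == "kube-reserved" {
			problems = append(problems, fmt.Sprintf("enforceNodeAllocatable contains %q, which failed with \"cgroup does not exist\" in this setup", key))
		}
	}
	return problems, nil
}

func main() {
	payload := `{"cgroupsPerQOS": true, "enforceNodeAllocatable": ["pods", "system-reserved"]}`
	problems, err := lint(payload)
	if err != nil {
		panic(err)
	}
	for _, p := range problems {
		fmt.Println("warning:", p)
	}
}
```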

You can see the Kubelet method that applies the Node Allocatable limits to the /kubepods.slice cgroup [here], or below.

// enforceNodeAllocatableCgroups enforce Node Allocatable Cgroup settings.
func (cm *containerManagerImpl) enforceNodeAllocatableCgroups() error {
	nc := cm.NodeConfig.NodeAllocatableConfig

	// We need to update limits on node allocatable cgroup no matter what because
	// default cpu shares on cgroups are low and can cause cpu starvation.
	nodeAllocatable := cm.internalCapacity
	// Use Node Allocatable limits instead of capacity if the user requested enforcing node allocatable.
	if cm.CgroupsPerQOS && nc.EnforceNodeAllocatable.Has(kubetypes.NodeAllocatableEnforcementKey) {
		nodeAllocatable = cm.getNodeAllocatableInternalAbsolute()
	}

	klog.V(4).Infof("Attempting to enforce Node Allocatable with config: %+v", nc)

	cgroupConfig := &CgroupConfig{
		Name:               cm.cgroupRoot,
		ResourceParameters: getCgroupConfig(nodeAllocatable),
	}

	// Using ObjectReference for events as the node maybe not cached; refer to #42701 for detail.
	nodeRef := &v1.ObjectReference{
		Kind:      "Node",
		Name:      cm.nodeInfo.Name,
		UID:       types.UID(cm.nodeInfo.Name),
		Namespace: "",
	}

	// If Node Allocatable is enforced on a node that has not been drained or is updated on an existing node to a lower value,
	// existing memory usage across pods might be higher than current Node Allocatable Memory Limits.
	// Pod Evictions are expected to bring down memory usage to below Node Allocatable limits.
	// Until evictions happen retry cgroup updates.
	// Update limits on non root cgroup-root to be safe since the default limits for CPU can be too low.
	// Check if cgroupRoot is set to a non-empty value (empty would be the root container)
	if len(cm.cgroupRoot) > 0 {
		go func() {
			for {
				err := cm.cgroupManager.Update(cgroupConfig)
				if err == nil {
					cm.recorder.Event(nodeRef, v1.EventTypeNormal, events.SuccessfulNodeAllocatableEnforcement, "Updated Node Allocatable limit across pods")
					return
				}
				message := fmt.Sprintf("Failed to update Node Allocatable Limits %q: %v", cm.cgroupRoot, err)
				cm.recorder.Event(nodeRef, v1.EventTypeWarning, events.FailedNodeAllocatableEnforcement, message)
				time.Sleep(time.Minute)
			}
		}()
	}
	...
}

If cm.cgroupManager.Update(cgroupConfig) returns nil, the following event is recorded for the target node:

$ kubectl describe nodes k8s-worker16

...

Normal KubeletConfigChanged 33m kubelet, k8s-worker16 Kubelet restarting to use /api/v1/namespaces/default/configmaps/node-config, UID: a8b4da9d-0b6e-478d-9e35-4a3696f5778c, ResourceVersion: 1464790, KubeletConfigKey: kubelet

Normal Starting 32m kubelet, k8s-worker16 Starting kubelet.

Normal NodeAllocatableEnforced 32m kubelet, k8s-worker16 Updated Node Allocatable limit across pods

Specifying Which Node Uses the ConfigMap

One more step remains: add a spec to the Kubernetes Node object that tells the node's Kubelet which ConfigMap object to use for dynamic configuration.

Open an editor with the following command to specify it:

$ kubectl edit node ${NODE_NAME}

The spec section may be empty if you have never edited it before.

Add a configSource element as follows (note that this is YAML):

spec:
  configSource:
    configMap:
      kubeletConfigKey: kubelet
      name: node-config
      namespace: default

Each key means:

- kubeletConfigKey: the key in the data dictionary of the ConfigMap object. In our case, we specified it as kubelet (kubelet: | { ... }).
- name: the name of the ConfigMap object.
- namespace: the namespace the ConfigMap object is in.

Once you save the spec, the Kubelet on the target node automatically restarts to apply the dynamic configuration.
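Instead of editing the Node interactively, the same change can be applied as a patch. In the Node API, name, namespace, and kubeletConfigKey live under spec.configSource.configMap. The sketch below builds that patch body with the standard library; the types are a hypothetical minimal mirror of those fields rather than the k8s.io/api types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// configMapRef mirrors spec.configSource.configMap (hypothetical minimal type).
type configMapRef struct {
	Name             string `json:"name"`
	Namespace        string `json:"namespace"`
	KubeletConfigKey string `json:"kubeletConfigKey"`
}

// nodePatch contains only the part of the Node object being changed.
type nodePatch struct {
	Spec struct {
		ConfigSource struct {
			ConfigMap configMapRef `json:"configMap"`
		} `json:"configSource"`
	} `json:"spec"`
}

// configSourcePatch returns a JSON patch body that points a node at the
// given ConfigMap and data key.
func configSourcePatch(name, namespace, key string) (string, error) {
	var p nodePatch
	p.Spec.ConfigSource.ConfigMap = configMapRef{Name: name, Namespace: namespace, KubeletConfigKey: key}
	b, err := json.Marshal(p)
	return string(b), err
}

func main() {
	patch, err := configSourcePatch("node-config", "default", "kubelet")
	if err != nil {
		panic(err)
	}
	fmt.Println(patch)
}
```

This prints {"spec":{"configSource":{"configMap":{"name":"node-config","namespace":"default","kubeletConfigKey":"kubelet"}}}}, which can be passed to kubectl patch node ${NODE_NAME} -p '...'.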

Verifying Dynamic Kubelet Configuration

Check the result with $ kubectl get nodes ${NODE_NAME} -o yaml.

- First, check whether the spec has been applied:

  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:spec:
        f:configSource:
          ...
spec:
  configSource:
    configMap:
      kubeletConfigKey: kubelet
      name: node-config
      namespace: default

- Second, check the node status, where the Kubelet reports whether the configuration was applied successfully:

status:
  addresses: <omitted>
  allocatable: <omitted>
  capacity: <omitted>
  conditions: <omitted>
  config:
    active:                 <-- must exist
      configMap:
        kubeletConfigKey: kubelet
        name: node-config
        namespace: default
        resourceVersion: "1024519"
        uid: a8b4da9d-0b6e-478d-9e35-4a3696f5778c
    assigned:
      configMap:
        kubeletConfigKey: kubelet
        name: node-config
        namespace: default
        resourceVersion: "1024519"
        uid: a8b4da9d-0b6e-478d-9e35-4a3696f5778c
    lastKnownGood:
      configMap:
        kubeletConfigKey: kubelet
        name: node-config
        namespace: default
        resourceVersion: "1024519"
        uid: a8b4da9d-0b6e-478d-9e35-4a3696f5778c

Check that status.config.active exists and that there is no status.config.error field. If an error occurred while applying the assigned configuration, the Kubelet restarts with the status.config.lastKnownGood configuration instead, so status.config.active.configMap.resourceVersion may differ from status.config.assigned.configMap.resourceVersion.
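These checks can be automated against the output of kubectl get node ${NODE_NAME} -o json. In the Node API the fields live under status.config (active, assigned, lastKnownGood, and an error string). The struct types below are a hypothetical minimal mirror of those fields rather than the k8s.io/api types, so the sketch needs only the standard library; the sample in main reuses the values from the status output above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type configMapSource struct {
	Name             string `json:"name"`
	Namespace        string `json:"namespace"`
	KubeletConfigKey string `json:"kubeletConfigKey"`
	ResourceVersion  string `json:"resourceVersion"`
	UID              string `json:"uid"`
}

type nodeConfigSource struct {
	ConfigMap *configMapSource `json:"configMap"`
}

// node keeps only status.config from the kubectl get node -o json output.
type node struct {
	Status struct {
		Config struct {
			Active        *nodeConfigSource `json:"active"`
			Assigned      *nodeConfigSource `json:"assigned"`
			LastKnownGood *nodeConfigSource `json:"lastKnownGood"`
			Error         string            `json:"error"`
		} `json:"config"`
	} `json:"status"`
}

// checkConfigStatus returns nil only if the Kubelet reports no error and is
// running exactly the assigned ConfigMap version.
func checkConfigStatus(nodeJSON []byte) error {
	var n node
	if err := json.Unmarshal(nodeJSON, &n); err != nil {
		return fmt.Errorf("decoding node: %w", err)
	}
	cfg := n.Status.Config
	if cfg.Error != "" {
		return fmt.Errorf("status.config.error: %s", cfg.Error)
	}
	if cfg.Active == nil || cfg.Active.ConfigMap == nil {
		return fmt.Errorf("status.config.active is missing")
	}
	if cfg.Assigned == nil || cfg.Assigned.ConfigMap == nil {
		return fmt.Errorf("status.config.assigned is missing")
	}
	a, s := cfg.Active.ConfigMap, cfg.Assigned.ConfigMap
	if a.UID != s.UID || a.ResourceVersion != s.ResourceVersion {
		return fmt.Errorf("active resourceVersion %s differs from assigned %s; the Kubelet may have fallen back to lastKnownGood",
			a.ResourceVersion, s.ResourceVersion)
	}
	return nil
}

func main() {
	sample := []byte(`{"status":{"config":{
		"active":{"configMap":{"name":"node-config","namespace":"default","kubeletConfigKey":"kubelet","resourceVersion":"1024519","uid":"a8b4da9d-0b6e-478d-9e35-4a3696f5778c"}},
		"assigned":{"configMap":{"name":"node-config","namespace":"default","kubeletConfigKey":"kubelet","resourceVersion":"1024519","uid":"a8b4da9d-0b6e-478d-9e35-4a3696f5778c"}}}}}`)
	if err := checkConfigStatus(sample); err != nil {
		fmt.Println("NOT OK:", err)
		return
	}
	fmt.Println("OK: the Kubelet is running the assigned configuration")
}
```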

If an error occurred, the systemd journal indicates what went wrong:

## This error is logged when data.kubelet.kind is missing.

Aug 24 13:16:04 localhost.worker kubelet[278409]: E0824 13:16:04.368361 278409 controller.go:151] kubelet config controller: failed to load config, see Kubelet log for details, error: failed to decode: Object 'Kind' is missing in '{

My configuration also contains resource reservations, and they are applied as intended:

$ kubectl get nodes ${NODE_NAME} -o yaml

status:
  allocatable:
    cpu: "1"
    ephemeral-storage: "66100978374"
    hugepages-1Gi: "0"
    hugepages-2Mi: "0"
    memory: 4775280Ki
    pods: "110"
  capacity:
    cpu: "4"
    ephemeral-storage: 71724152Ki
    hugepages-1Gi: "0"
    hugepages-2Mi: "0"
    memory: 8023408Ki
    pods: "110"

Since my configuration restricts CPU and memory, also check whether the restriction is actually applied to the Linux cgroup:

/sys/fs/cgroup/memory/kubepods.slice $ cat memory.limit_in_bytes
4994744320 // = capacity - systemReserved - kubeReserved = 8023408Ki - 3Gi = 4877680Ki; allocatable (4775280Ki) is 100Mi lower
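The gap between the cgroup limit and the reported allocatable memory can be reproduced with a little arithmetic. The sketch assumes the Kubelet's default hard eviction threshold of memory.available<100Mi is in effect on this node: the limit written to kubepods.slice is capacity minus systemReserved and kubeReserved, while the allocatable value on the Node object also subtracts that eviction threshold. memoryFigures is a hypothetical helper.

```go
package main

import "fmt"

const (
	ki = 1024
	mi = 1024 * ki
	gi = 1024 * mi
)

// memoryFigures returns, in bytes, the cgroup limit for kubepods.slice
// (capacity minus both reservations) and the allocatable memory reported on
// the Node (the cgroup limit minus the hard eviction threshold).
func memoryFigures(capacity, systemReserved, kubeReserved, evictionHard int64) (cgroupLimit, allocatable int64) {
	cgroupLimit = capacity - systemReserved - kubeReserved
	allocatable = cgroupLimit - evictionHard
	return
}

func main() {
	capacity := int64(8023408) * ki // status.capacity.memory from the node above
	limit, alloc := memoryFigures(capacity, 2*gi, 1*gi, 100*mi)
	fmt.Println(limit)          // 4994744320, same as memory.limit_in_bytes
	fmt.Println(alloc/ki, "Ki") // 4775280 Ki, same as status.allocatable.memory
}
```

The CPU figures follow the same pattern without an eviction term: 4 - 2 - 1 = 1 allocatable CPU.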

Authentication and Authorization

During the setup, the Kubelet fetches a ConfigMap object from the apiserver, which requires the Kubelet to be authenticated and authorized. According to [1], the Node Authorizer now configures the required rules automatically, so there is nothing to do:

Previously, you were required to manually create RBAC rules to allow Nodes to access their assigned ConfigMaps. The Node Authorizer now automatically configures these rules.

Here, the Node Authorizer means that the kube-apiserver's authorization mode includes Node [node-auth], as it does in my cluster by default:


$ ps -ef | grep kube-apiserver

root 91113 91100 2 Aug19 ? 02:29:37 kube-apiserver ... --authorization-mode=Node,RBAC

With this mode, any user in the system:nodes group (with a username of the form system:node:<nodeName>) is allowed to:

- read services, endpoints, nodes, pods, secrets, configmaps, and persistent volumes (bound to the Kubelet’s node)

- write nodes (+status), pods (+status), and events.

Dynamic Kubelet configuration requires permission to (1) read ConfigMaps and (2) write node status.

Note that getting an object and listing objects are different permissions.

For a node's identity, kubectl get configmaps node-config (getting the ConfigMap assigned to that node) should be allowed, while kubectl get configmaps (getting all ConfigMaps, i.e. listing them) is still denied.