Configuring NVIDIA BlueField2 SmartNIC


A SmartNIC is an emerging class of hardware: a NIC equipped with general-purpose CPU cores. NVIDIA BlueField2 has 8 ARM Cortex-A72 cores, which can run offloaded functions. These functions are not limited to packet processing; they can also be more complex applications, such as file systems.

This post describes how to configure an NVIDIA BlueField2 SmartNIC on CloudLab r7525 machines.

Initial Setup

First, install the Mellanox OFED device drivers from here. The current latest version is 5.5-1.0.3.2 and the LTS version is 4.9-4.1.7.0, but I used version 5.4-3.1.0.0.

Once you untar the archive, run mlnxofedinstall to install the drivers, and then restart openibd to load them:

$ sudo ./mlnxofedinstall --without-fw-update

...

$ sudo /etc/init.d/openibd restart

Unloading HCA driver: [ OK ]

Loading HCA driver and Access Layer: [ OK ]
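The two commands above can be wrapped into a small shell function so the install is repeatable across CloudLab nodes. This is a sketch under assumptions: `install_ofed`, its directory argument (the directory you untarred) and the `DRY_RUN` switch are hypothetical names, and it runs nothing beyond the two commands shown above.

```shell
#!/bin/sh
# Sketch: install MLNX_OFED from an already-untarred directory, then restart openibd.
# Hypothetical names: install_ofed, DRY_RUN. The directory name depends on the
# OFED version/distro you downloaded, so it is passed in as an argument.

# Print the command (DRY_RUN set) or execute it (DRY_RUN unset).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

install_ofed() {
    if [ -z "${1:-}" ]; then
        echo "usage: install_ofed OFED_DIR" >&2
        return 2
    fi
    # Run the installer from its own directory, skipping the firmware update.
    (cd "$1" && run sudo ./mlnxofedinstall --without-fw-update) || return 1
    # Reload the freshly installed HCA drivers.
    run sudo /etc/init.d/openibd restart
}
```

For example, `DRY_RUN=1 install_ofed "$OFED_DIR"` only prints the two commands; drop `DRY_RUN` to actually run them.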

Now you will see a new network interface, tmfifo_net0, managed by the rshim host driver. You can access BlueField via this interface.

According to Section 2.7 of this manual, NVIDIA and Mellanox explain that BlueField can be accessed via rshim USB and rshim PCIe, which are managed by the kernel modules rshim_usb.ko and rshim_pcie.ko, respectively. This seems to have changed: no kernel module named rshim* is installed, and a single userspace process, /usr/sbin/rshim, managed by systemd, is used instead. Its source code is open here.

$ lsmod | grep -i rshim

(nothing)

$ modinfo rshim

modinfo: ERROR: Module rshim not found.

$ systemctl status rshim

● rshim.service - rshim driver for BlueField SoC

Loaded: loaded (/lib/systemd/system/rshim.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2022-01-06 10:31:28 EST; 11h ago

Docs: man:rshim(8)

Main PID: 465131 (rshim)

Tasks: 6 (limit: 618624)

Memory: 2.8M

CGroup: /system.slice/rshim.service

└─465131 /usr/sbin/rshim

...
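To check which of the two rshim layouts a host uses, and whether `tmfifo_net0` has shown up, a minimal sketch could look like the following. `rshim_kind`, `tmfifo_present` and the `NET_SYSFS` override are hypothetical helpers; they only reproduce the `lsmod` check above and look for the interface under `/sys/class/net`.

```shell
#!/bin/sh
# Sketch: identify how rshim is provided and whether tmfifo_net0 exists.
# Hypothetical names: rshim_kind, tmfifo_present, NET_SYSFS (test override).

# Reads `lsmod`-style text on stdin. Prints "kernel-module" if any rshim* module
# is listed (the layout in the older manual), otherwise "userspace".
rshim_kind() {
    if awk 'tolower($1) ~ /^rshim/ { found = 1 } END { exit !found }'; then
        echo "kernel-module"
    else
        echo "userspace"
    fi
}

# Succeeds if the tmfifo_net0 interface exists under sysfs.
tmfifo_present() {
    [ -e "${NET_SYSFS:-/sys/class/net}/tmfifo_net0" ]
}
```

On a setup like the one above, `lsmod | rshim_kind` prints `userspace`, in which case `systemctl status rshim` is the place to look; `tmfifo_present && echo ok` confirms the interface is there.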

The other end of tmfifo_net0 is inside BlueField and has the IPv4 address 192.168.100.2/24, so you can ssh into BlueField via this address. First, assign the host end an address in the same subnet:

$ sudo ip addr add 192.168.100.1/24 dev tmfifo_net0

$ ping 192.168.100.2 # check whether it is properly configured

PING 192.168.100.2 (192.168.100.2) 56(84) bytes of data.

64 bytes from 192.168.100.2: icmp_seq=1 ttl=64 time=0.821 ms

...

$ ssh ubuntu@192.168.100.2

ubuntu@192.168.100.2's password: ubuntu

By default, both the username and the password are ubuntu.
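The host-side tmfifo steps can be sketched as one re-runnable function. It assumes the BlueField end keeps the default 192.168.100.2 address mentioned above; `setup_tmfifo` and `DRY_RUN` are hypothetical names. It uses `ip addr replace` instead of `ip addr add`, because `replace` does not fail when the address is already assigned, so running it twice is harmless.

```shell
#!/bin/sh
# Sketch: configure the host end of tmfifo_net0 and check that BlueField answers.
# Hypothetical names: setup_tmfifo, DRY_RUN. Defaults follow the addresses in the text.

# Print the command (DRY_RUN set) or execute it (DRY_RUN unset).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

setup_tmfifo() {
    host_addr=${1:-192.168.100.1/24}
    bf_addr=${2:-192.168.100.2}
    # `replace` adds the address, or leaves it in place if it already exists.
    run sudo ip addr replace "$host_addr" dev tmfifo_net0
    run sudo ip link set dev tmfifo_net0 up
    # Two echo requests are enough to confirm the BlueField side is reachable.
    run ping -c 2 "$bf_addr"
}
```

Typical use: `setup_tmfifo && ssh ubuntu@192.168.100.2`.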

You are now in Linux running on the BlueField2 ARM CPU.

$ lscpu

Architecture: aarch64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 8

On-line CPU(s) list: 0-7

Thread(s) per core: 1

Core(s) per socket: 8

Socket(s): 1

NUMA node(s): 1

Vendor ID: ARM

Model: 0

Model name: Cortex-A72

...

Configuring Embedded CPU Function Ownership (ECPF) Mode

As of now, the NVIDIA BlueField2 SmartNIC has three modes of operation 1:

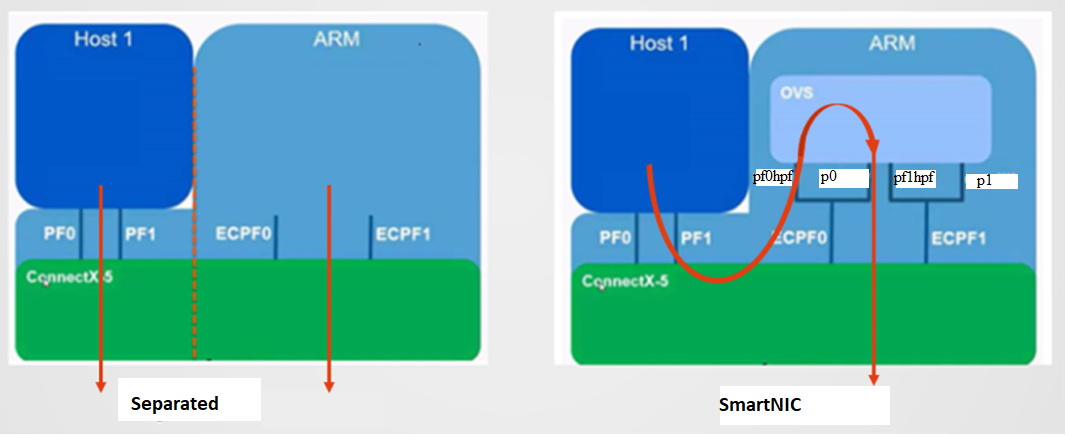

- Separated host mode (symmetric model)

- Embedded function (ECPF)

- Restricted mode

To check which mode a SmartNIC is running in, run the following commands on the host:

$ sudo mst start

$ sudo mst status -v # identify the MST device

$ sudo mlxconfig -d /dev/mst/mtXXXXX_pciconf0 q | grep -i internal_cpu_model

INTERNAL_CPU_MODEL EMBEDDED_CPU(1)

If you see SEPARATED_HOST(0), you can change it as follows and then restart the server:

$ sudo mlxconfig -d /dev/mst/mtXXXXX_pciconf0 s INTERNAL_CPU_MODEL=1

$ sudo mlxconfig -d /dev/mst/mtXXXXX_pciconf0.1 s INTERNAL_CPU_MODEL=1

$ # restart the server
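The check-then-change flow above can be scripted. This is a sketch: `cpu_model` and `set_ecpf` are hypothetical helpers, and the MST device path must come from `mst status -v`; it is passed in rather than guessed. The helpers only wrap the `mlxconfig` commands already shown, and a server restart is still needed afterwards.

```shell
#!/bin/sh
# Sketch helpers around the mlxconfig commands in the text.
# Hypothetical names: cpu_model, set_ecpf, DRY_RUN.

# Print the command (DRY_RUN set) or execute it (DRY_RUN unset).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Reads `mlxconfig ... q` output on stdin and prints the INTERNAL_CPU_MODEL value,
# e.g. EMBEDDED_CPU(1) or SEPARATED_HOST(0).
cpu_model() {
    awk '$1 == "INTERNAL_CPU_MODEL" { print $2; exit }'
}

# Sets INTERNAL_CPU_MODEL=1 (ECPF) on the device and its .1 function, as in the text.
# mlxconfig asks for confirmation before applying; reboot afterwards.
set_ecpf() {
    if [ -z "${1:-}" ]; then
        echo "usage: set_ecpf MST_DEVICE" >&2
        return 2
    fi
    run sudo mlxconfig -d "$1" s INTERNAL_CPU_MODEL=1
    run sudo mlxconfig -d "$1.1" s INTERNAL_CPU_MODEL=1
}
```

For example, pipe `sudo mlxconfig -d /dev/mst/mtXXXXX_pciconf0 q` into `cpu_model`, and call `set_ecpf /dev/mst/mtXXXXX_pciconf0` only if it prints `SEPARATED_HOST(0)`.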

In separated host mode, the host CPU and the ARM CPU act like separate nodes that share the same NIC. In ECPF mode, however, all traffic between the host server and the outside world goes through the SmartNIC ARM cores. For more detailed information, refer to this post 2.

The NVIDIA documentation says ECPF mode is the default (two years ago, Mellanox said separated host mode was the default; I am not sure which one is actually true). Either way, I use ECPF mode.

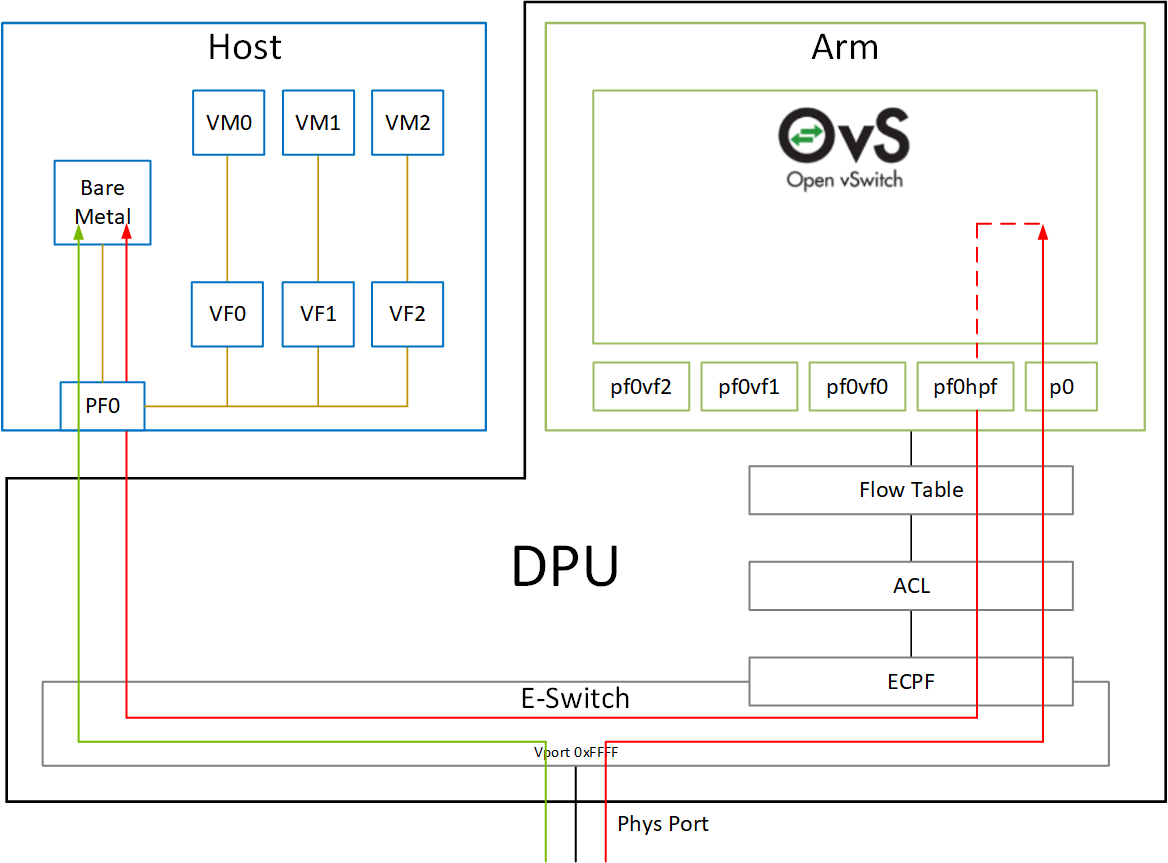

In ECPF ownership mode, BlueField uses netdev representors to map each of the host-side physical and virtual functions 3. As a result, you can see many network interfaces in BlueField:

$ ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tmfifo_net0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 00:1a:ca:ff:ff:01 brd ff:ff:ff:ff:ff:ff

3: oob_net0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc fq_codel state DOWN mode DEFAULT group default qlen 1000

link/ether 0c:42:a1:a4:89:ea brd ff:ff:ff:ff:ff:ff

4: p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP mode DEFAULT group default qlen 1000

link/ether 0c:42:a1:a4:89:e4 brd ff:ff:ff:ff:ff:ff

5: p1: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq master ovs-system state DOWN mode DEFAULT group default qlen 1000

link/ether 0c:42:a1:a4:89:e5 brd ff:ff:ff:ff:ff:ff

6: pf0hpf: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP mode DEFAULT group default qlen 1000

link/ether d2:d4:df:81:68:1f brd ff:ff:ff:ff:ff:ff

7: pf1hpf: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP mode DEFAULT group default qlen 1000

link/ether c6:ee:a2:c6:fa:e2 brd ff:ff:ff:ff:ff:ff

8: en3f0pf0sf0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP mode DEFAULT group default qlen 1000

link/ether fe:18:2b:c7:14:b8 brd ff:ff:ff:ff:ff:ff

9: enp3s0f0s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 02:9e:0f:d4:58:dc brd ff:ff:ff:ff:ff:ff

10: en3f1pf1sf0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP mode DEFAULT group default qlen 1000

link/ether fe:1f:d8:0d:c7:f1 brd ff:ff:ff:ff:ff:ff

11: enp3s0f1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 02:fb:ba:ee:89:92 brd ff:ff:ff:ff:ff:ff

12: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether ca:e4:08:3d:0f:fc brd ff:ff:ff:ff:ff:ff

13: ovsbr1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 0c:42:a1:a4:89:e4 brd ff:ff:ff:ff:ff:ff

14: ovsbr2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

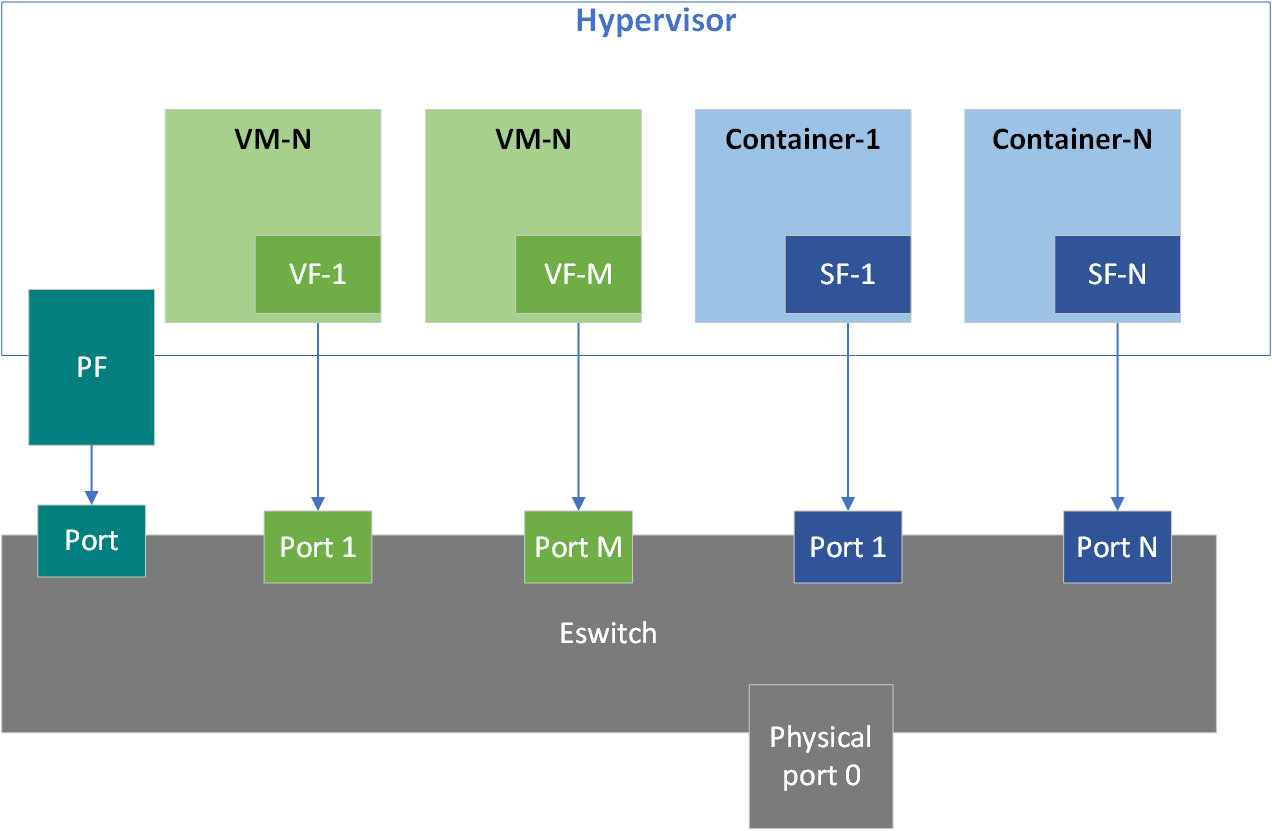

The naming convention for the representors is as follows, according to the DOCA documentation 3:

- Uplink representors: p<portnum>

- PF representors: pf<portnum>hpf

- VF representors: pf<portnum>vf<funcnum>
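The naming convention can also be applied in scripts, e.g. to pick out representors from the interface list. A sketch, assuming names follow the patterns above; `rep_kind` is a hypothetical helper, and its `sf-rep` and `sf-netdev` patterns are generalised from the names seen in this post (`en3f0pf0sf0`, `enp3s0f0s0`), not taken from the DOCA documentation.

```shell
#!/bin/sh
# Sketch: classify a BlueField netdev name by the representor naming convention.
# Hypothetical helper: rep_kind. The two SF patterns are assumptions based on the
# interface names observed on this machine.

rep_kind() {
    case "$1" in
        pf[0-9]*hpf)          echo "pf-rep" ;;      # PF representor
        pf[0-9]*vf[0-9]*)     echo "vf-rep" ;;      # VF representor
        p[0-9]*)              echo "uplink-rep" ;;  # uplink representor
        en*pf[0-9]*sf[0-9]*)  echo "sf-rep" ;;      # SF representor (assumed pattern)
        enp*s*f*s[0-9]*)      echo "sf-netdev" ;;   # SF kernel netdev (assumed pattern)
        *)                    echo "other" ;;
    esac
}
```

For example, `for path in /sys/class/net/*; do n=${path##*/}; echo "$n $(rep_kind "$n")"; done` labels every interface on the SmartNIC.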

As mentioned before, all traffic from the host goes through the SmartNIC ARM cores; this is handled by an Open vSwitch (OVS) virtual switch running on the ARM cores.

$ systemctl status openvswitch-switch.service

● openvswitch-switch.service - LSB: Open vSwitch switch

Loaded: loaded (/etc/init.d/openvswitch-switch; generated)

Active: active (exited) since Wed 2021-07-21 19:00:40 UTC; 13h ago

Docs: man:systemd-sysv-generator(8)

Process: 2374 ExecStart=/etc/init.d/openvswitch-switch start (code=exited, status=0/SUCCESS)

...

$ ovs-vsctl show

Bridge ovsbr1

Port pf0hpf

Interface pf0hpf

Port ovsbr1

Interface ovsbr1

type: internal

Port en3f0pf0sf0

Interface en3f0pf0sf0

Port p0

Interface p0

Bridge ovsbr2

Port p1

Interface p1

Port en3f1pf1sf0

Interface en3f1pf1sf0

Port ovsbr2

Interface ovsbr2

type: internal

Port pf1hpf

Interface pf1hpf

ovs_version: "2.14.1"
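For a more compact view than `ovs-vsctl show`, the standard `ovs-vsctl list-br` and `ovs-vsctl list-ports BRIDGE` subcommands can be combined. A sketch; `bridge_ports` and the `OVS_VSCTL` override (used only for testing) are hypothetical. `list-ports` leaves out the bridge's own internal port, so on the setup above each bridge should show its uplink, PF and SF representors.

```shell
#!/bin/sh
# Sketch: print each OVS bridge and its ports on one line.
# Hypothetical names: bridge_ports, OVS_VSCTL (defaults to the real ovs-vsctl).

bridge_ports() {
    vsctl=${OVS_VSCTL:-ovs-vsctl}
    for br in $("$vsctl" list-br); do
        # list-ports prints one port per line; join them with spaces.
        ports=$("$vsctl" list-ports "$br" | tr '\n' ' ')
        # Drop the trailing space left by tr.
        echo "$br: ${ports% }"
    done
}
```

Run it as root on the SmartNIC; it should list `pf0hpf`, `en3f0pf0sf0` and `p0` under `ovsbr1`, and the `p1`/`pf1hpf`/`en3f1pf1sf0` set under `ovsbr2`.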

NVIDIA BlueField2 supports OVS offloading using NVIDIA ASAP2 (Accelerated Switching and Packet Processing) technology. Refer to this for more information.

Note that there are no VF representors in my environment; instead, there are SF representors. At first I thought SF stood for subfunction, but NVIDIA's BlueField-2 documentation calls it a scalable function 4.

Configuring SmartNIC Network Connectivity

At this point, Linux on the SmartNIC cannot reach a SmartNIC on another machine, or even its own host, except through the rshim layer.

Host Connection

As I don’t use a DHCP server, I manually assign IPv4 addresses to the SFs, following the manual.

# on the SmartNIC Linux

$ ovs-vsctl show

Bridge ovsbr1

Port en3f0pf0sf0

Interface en3f0pf0sf0

...

$ ip link

8: en3f0pf0sf0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether fe:18:2b:c7:14:b8 brd ff:ff:ff:ff:ff:ff

9: enp3s0f0s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 02:9e:0f:d4:58:dc brd ff:ff:ff:ff:ff:ff

...

$ sudo ip addr add 192.168.10.2/24 dev enp3s0f0s0

# on the host Linux

$ ip link

...

15: ens5f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 0c:42:a1:a4:89:e0 brd ff:ff:ff:ff:ff:ff

$ sudo ip addr add 192.168.10.1/24 dev ens5f0

Refer to this answer to find out why there are two similar network interfaces:

There are two representors for each one of the DPU’s network ports: one for the uplink, and another one for the host side PF (the PF representor created even if the PF is not probed on the host side).

If you check mlnx-sf -a show on the DPU, you’ll see the kernel netdev and the representor names for the DPU itself. The Representor netdev devices are already connected (added to) the OVS bridge by default, so we only need to use netplan to assign an IP address to enp3s0f1s0 or enp3s0f0s0 (or both), and that should work.

Here is the output of mlnx-sf -a show on my SmartNIC Linux:

$ mlnx-sf -a show

SF Index: pci/0000:03:00.0/229408

Parent PCI dev: 0000:03:00.0

Representor netdev: en3f0pf0sf0

Function HWADDR: 02:9e:0f:d4:58:dc

Auxiliary device: mlx5_core.sf.2

netdev: enp3s0f0s0

RDMA dev: mlx5_2

SF Index: pci/0000:03:00.1/294944

Parent PCI dev: 0000:03:00.1

Representor netdev: en3f1pf1sf0

Function HWADDR: 02:fb:ba:ee:89:92

Auxiliary device: mlx5_core.sf.3

netdev: enp3s0f1s0

RDMA dev: mlx5_3

That’s why I assigned the IP address to enp3s0f0s0 (the SF’s kernel netdev), not to en3f0pf0sf0 (its representor, which is attached to the OVS bridge).
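Because the interface that gets the IP address (the SF's netdev) and the one in the OVS bridge (its representor) are easy to mix up, the `mlnx-sf -a show` output can be reduced to pairs. A sketch; `sf_pairs` is a hypothetical helper and relies only on the `Representor netdev:` and `netdev:` labels in the output above.

```shell
#!/bin/sh
# Sketch: turn `mlnx-sf -a show` output (on stdin) into "representor -> netdev" lines.
# Hypothetical helper: sf_pairs. Field labels are taken from the output in the text.

sf_pairs() {
    awk '
        # "Representor netdev: X" also contains "netdev:", so match it first.
        $1 == "Representor" && $2 == "netdev:" { rep = $3; next }
        # The SF kernel netdev line closes one SF entry.
        $1 == "netdev:"                        { print rep " -> " $2 }
    '
}
```

With the output above, `mlnx-sf -a show | sf_pairs` would print `en3f0pf0sf0 -> enp3s0f0s0` and `en3f1pf1sf0 -> enp3s0f1s0`; the right-hand name is the one to assign an address to.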

Now the host and the SmartNIC can ping each other.

# on the SmartNIC Linux

$ ping 192.168.10.1 -c 2

PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.

64 bytes from 192.168.10.1: icmp_seq=1 ttl=64 time=51.8 ms

64 bytes from 192.168.10.1: icmp_seq=2 ttl=64 time=0.114 ms

# on the host Linux

$ ping 192.168.10.2 -c 2

PING 192.168.10.2 (192.168.10.2) 56(84) bytes of data.

64 bytes from 192.168.10.2: icmp_seq=1 ttl=64 time=33.7 ms

64 bytes from 192.168.10.2: icmp_seq=2 ttl=64 time=0.143 ms
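Addresses added with `ip addr add` do not survive a reboot. The quoted answer suggests netplan instead; the sketch below prints a matching fragment for the SmartNIC side. The function name `sf_netplan` and the target file `/etc/netplan/60-sf.yaml` are hypothetical; netplan merges all YAML files in `/etc/netplan`, so the fragment can sit next to the existing configuration.

```shell
#!/bin/sh
# Sketch: print a netplan fragment that assigns a static address to an SF netdev.
# Hypothetical helper: sf_netplan. Defaults match the SmartNIC address in the text.

sf_netplan() {
    iface=${1:-enp3s0f0s0}
    addr=${2:-192.168.10.2/24}
    cat <<EOF
network:
  version: 2
  ethernets:
    $iface:
      addresses:
        - $addr
EOF
}
```

For example, `sf_netplan enp3s0f0s0 192.168.10.2/24 | sudo tee /etc/netplan/60-sf.yaml` followed by `sudo netplan apply`.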

Connecting Multiple Nodes

If the host PFs and SmartNIC SFs of multiple nodes are in the same subnet, they can reach each other as well. For example:

- Node 1 host: ens5f0, 192.168.10.1/24

- Node 1 SmartNIC: enp3s0f0s0, 192.168.10.2/24

- Node 2 host: ens5f0, 192.168.10.3/24

- Node 2 SmartNIC: enp3s0f0s0, 192.168.10.4/24

Ping from 1 to 2 (host to SmartNIC in the same machine):

$ ping 192.168.10.2 -c 2

PING 192.168.10.2 (192.168.10.2) 56(84) bytes of data.

64 bytes from 192.168.10.2: icmp_seq=1 ttl=64 time=15.5 ms

64 bytes from 192.168.10.2: icmp_seq=2 ttl=64 time=0.133 ms

Ping from 1 to 3 (host to another host):

$ ping 192.168.10.3 -c 2

PING 192.168.10.3 (192.168.10.3) 56(84) bytes of data.

64 bytes from 192.168.10.3: icmp_seq=1 ttl=64 time=94.4 ms

64 bytes from 192.168.10.3: icmp_seq=2 ttl=64 time=0.078 ms

Ping from 1 to 4 (host to SmartNIC in another machine):

$ ping 192.168.10.4 -c 2

PING 192.168.10.4 (192.168.10.4) 56(84) bytes of data.

64 bytes from 192.168.10.4: icmp_seq=1 ttl=64 time=0.403 ms

64 bytes from 192.168.10.4: icmp_seq=2 ttl=64 time=25.6 ms

Ping from 2 to 3 (SmartNIC to another host):

$ ping 192.168.10.3 -c 2

PING 192.168.10.3 (192.168.10.3) 56(84) bytes of data.

64 bytes from 192.168.10.3: icmp_seq=1 ttl=64 time=97.2 ms

64 bytes from 192.168.10.3: icmp_seq=2 ttl=64 time=0.087 ms

Ping from 2 to 4 (SmartNIC to another SmartNIC):

$ ping 192.168.10.4 -c 2

PING 192.168.10.4 (192.168.10.4) 56(84) bytes of data.

64 bytes from 192.168.10.4: icmp_seq=1 ttl=64 time=0.217 ms

64 bytes from 192.168.10.4: icmp_seq=2 ttl=64 time=0.187 ms
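The pings above can be repeated for every address in the example plan with a small loop. A sketch; `check_peers` and the `PING` override (for testing only) are hypothetical, and `-W 1` (iputils' reply timeout in seconds) is added so an unreachable peer fails quickly.

```shell
#!/bin/sh
# Sketch: ping each given address and report OK/FAIL per peer.
# Hypothetical names: check_peers, PING (defaults to the real ping).

check_peers() {
    for addr in "$@"; do
        if ${PING:-ping} -c 2 -W 1 "$addr" >/dev/null 2>&1; then
            echo "$addr OK"
        else
            echo "$addr FAIL"
        fi
    done
}
```

For example, run `check_peers 192.168.10.1 192.168.10.2 192.168.10.3 192.168.10.4` from any of the four endpoints.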

Enable NAT for Network in SmartNIC Linux

In the CloudLab configuration, only the eno1 interface on the host is connected to the Internet. Hence, SmartNIC Linux has to go through the host to reach the Internet.

This post explains well how to set up NAT between them 5, so I just summarize the steps here.

# On the host

$ echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward

$ sudo iptables -t nat -A POSTROUTING -o eno1 -j MASQUERADE

# In case IP forwarding is not working

$ sudo iptables -A FORWARD -o eno1 -j ACCEPT

$ sudo iptables -A FORWARD -m state --state ESTABLISHED,RELATED -i eno1 -j ACCEPT

# On the SmartNIC

$ echo "nameserver 8.8.8.8" | sudo tee /etc/resolv.conf

# On the SmartNIC

$ ping google.com -c 2

PING google.com (74.125.21.100) 56(84) bytes of data.

64 bytes from yv-in-f100.1e100.net (74.125.21.100): icmp_seq=1 ttl=108 time=7.19 ms

64 bytes from yv-in-f100.1e100.net (74.125.21.100): icmp_seq=2 ttl=108 time=6.93 ms
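The host-side NAT steps can be collected into one function. A sketch, assuming the uplink is `eno1` as in this CloudLab setup and that the SmartNIC already sends its Internet-bound traffic to the host (check with `ip route` on the SmartNIC). `enable_nat` and `DRY_RUN` are hypothetical names, and `sysctl -w net.ipv4.ip_forward=1` replaces the `tee` into `/proc`, which has the same effect.

```shell
#!/bin/sh
# Sketch: enable IPv4 forwarding and masquerading on the host uplink.
# Hypothetical names: enable_nat, DRY_RUN. WAN interface defaults to eno1.

# Print the command (DRY_RUN set) or execute it (DRY_RUN unset).
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

enable_nat() {
    wan=${1:-eno1}
    # Same effect as: echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward
    run sudo sysctl -w net.ipv4.ip_forward=1
    # Rewrite the source address of forwarded packets leaving through the uplink.
    run sudo iptables -t nat -A POSTROUTING -o "$wan" -j MASQUERADE
    # Accept outbound forwarded traffic and the replies to those connections.
    run sudo iptables -A FORWARD -o "$wan" -j ACCEPT
    run sudo iptables -A FORWARD -m state --state ESTABLISHED,RELATED -i "$wan" -j ACCEPT
}
```

Note that `iptables -A` appends, so running `enable_nat` twice adds duplicate rules, and none of these settings persist across a reboot.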

- NVIDIA BlueField DPU Family Software Documentation: DPU Operation

- NVIDIA Mellanox Bluefield-2 SmartNIC Hands-on Tutorial Part 2: Change Mode of Operation and Install DPDK

- NVIDIA BlueField DPU OS Platform Documentation: Scalable Functions

- NVIDIA Mellanox Bluefield-2 SmartNIC Hands-on Tutorial Part 1: Install Drivers and Access the SmartNIC