Accelerating Ceph RPM Packaging: Using Multithreaded Compression


This post explains how to accelerate building a Ceph RPM package. The techniques described here apply to packaging other applications as well, not only Ceph.

The Ceph source code is hosted on GitHub [1], and the repository also contains several shell scripts for packaging. Before explaining how these scripts work, we need to understand how RPM packaging works.

1. RPM Packaging 101

RPM (originally short for Red Hat Package Manager) is a package management system developed by Red Hat [2]. Many Linux distributions, such as Red Hat Enterprise Linux (RHEL), Fedora, CentOS, and openSUSE, use RPM as their default package management system.

Red Hat provides a well-written guide to packaging RPMs [3]. This post gives only a simplified overview; for more detail, please refer to that guide.

$ sudo dnf install rpm-build rpmdevtools

$ rpmdev-setuptree

$ rpmbuild <options>

rpmdev-setuptree creates a basic directory structure in your home directory: /home/user/rpmbuild/{BUILD,RPMS,SOURCES,SPECS,SRPMS}. rpmbuild builds an RPM package from the data in /home/user/rpmbuild, and the generated binary RPM packages are stored in /home/user/rpmbuild/RPMS.

The purpose of each directory is explained in the guide.
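For reference, the layout that rpmdev-setuptree creates can also be made by hand. This is a minimal sketch with a hypothetical function name (make_rpm_tree); it only creates the five directories and, unlike rpmdev-setuptree, it does not write any ~/.rpmmacros file.

```shell
#!/bin/sh
# Hypothetical helper: create the rpmbuild directory layout by hand.
# The top directory defaults to ~/rpmbuild, which is rpmbuild's default %_topdir.
make_rpm_tree() {
    topdir=${1:-$HOME/rpmbuild}
    for dir in BUILD RPMS SOURCES SPECS SRPMS; do
        mkdir -p "$topdir/$dir"
    done
}
```

Calling make_rpm_tree with no argument creates the directories under ~/rpmbuild; passing a path such as /tmp/rpmbuild creates them there instead.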

2. Building a Ceph RPM Package

rpmbuild supports three ways to build an RPM package: from a spec file (e.g. ceph.spec), from a source tarball, or from an SRPM (source RPM) package.

$ rpmbuild --help

Usage: rpmbuild [OPTION...]

Build options with [ <specfile> | <tarball> | <source package> ]:

-bb build binary package only from <specfile> # use specfile

-bs build source package only from <specfile>

-rb build binary package only from <source package> # use SRPM

-rs build source package only from <source package>

-tb build binary package only from <tarball> # use source files

-ts build source package only from <tarball>

...
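The three modes boil down to a mapping from the input file to an rpmbuild option. The helper below (rpmbuild_cmd_for, a hypothetical name) is a dry-run sketch that only prints the command it would use; for SRPMs it prints --rebuild, the option used later in this post, rather than -rb.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the rpmbuild command that matches
# the type of the given input file, following the help text above.
rpmbuild_cmd_for() {
    case "$1" in
        *.src.rpm)           echo "rpmbuild --rebuild $1" ;;  # SRPM
        *.spec)              echo "rpmbuild -bb $1" ;;        # spec file
        *.tar|*.tar.*|*.tgz) echo "rpmbuild -tb $1" ;;        # tarball
        *) echo "unsupported input: $1" >&2; return 1 ;;
    esac
}
```

The *.src.rpm pattern is checked first, so an SRPM is never mistaken for another input type.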

Using a SPEC file

I will not build the RPM package directly from the spec file, because ceph.spec downloads the archived source code from a website, whereas I wanted a custom build.

ceph.spec:

...

%global _remote_tarball_prefix https://download.ceph.com/tarballs/ <<---

...

#################################################################################

# main package definition

#################################################################################

Name: ceph

Version: 16.0.0

Release: 6971.g6352099561%{?dist}

%if 0%{?fedora} || 0%{?rhel}

Epoch: 2

%endif

# define _epoch_prefix macro which will expand to the empty string if epoch is

# undefined

%global _epoch_prefix %{?epoch:%{epoch}:}

Summary: User space components of the Ceph file system

License: LGPL-2.1 and LGPL-3.0 and CC-BY-SA-3.0 and GPL-2.0 and BSL-1.0 and BSD-3-Clause and MIT

%if 0%{?suse_version}

Group: System/Filesystems

%endif

URL: http://ceph.com/

Source0: %{?_remote_tarball_prefix}ceph-16.0.0-6971-g6352099561.tar.bz2 <<---

...

This ceph.spec file is automatically generated from ceph.spec.in by the make-dist command. You can run ./make-dist <version_name> to customize the version; by default, it uses git describe --long --match 'v*' | sed 's/^v//' as the version name:

version=$1
[ -z "$version" ] && version=$(git describe --long --match 'v*' | sed 's/^v//')
if expr index $version '-' > /dev/null; then
    rpm_version=$(echo $version | cut -d - -f 1-1)
    rpm_release=$(echo $version | cut -d - -f 2- | sed 's/-/./')
else
    rpm_version=$version
    rpm_release=0
fi
...
# populate files with version strings
echo "including src/.git_version, ceph.spec"
(git rev-parse HEAD ; echo $version) 2> /dev/null > src/.git_version
for spec in ceph.spec.in; do
    cat $spec |
        sed "s/@PROJECT_VERSION@/$rpm_version/g" |
        sed "s/@RPM_RELEASE@/$rpm_release/g" |
        sed "s/@TARBALL_BASENAME@/ceph-$version/g" > `echo $spec | sed 's/.in$//'`
done
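The version-splitting step of make-dist can be checked in isolation. This is a minimal POSIX sh sketch, not the code make-dist actually runs: the function name split_ceph_version is hypothetical, and it uses parameter expansion instead of expr index (a GNU extension).

```shell
#!/bin/sh
# Hypothetical re-implementation of make-dist's version split.
# Prints "<rpm_version> <rpm_release>".
split_ceph_version() {
    local version=$1 rest rpm_version rpm_release
    case "$version" in
        *-*)
            rpm_version=${version%%-*}   # like cut -d - -f 1-1
            rest=${version#*-}           # like cut -d - -f 2-
            # Like sed 's/-/./': replace only the first remaining '-' with '.'
            case "$rest" in
                *-*) rpm_release="${rest%%-*}.${rest#*-}" ;;
                *)   rpm_release=$rest ;;
            esac
            ;;
        *)
            rpm_version=$version
            rpm_release=0
            ;;
    esac
    echo "$rpm_version $rpm_release"
}
```

For the version used in this post, split_ceph_version 16.0.0-6971-g6352099561 prints 16.0.0 6971.g6352099561, which matches the Version and Release fields of the generated ceph.spec.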

By default, packaging a Ceph RPM with ceph.spec downloads the archived source code from https://download.ceph.com/tarballs/ceph-<version>.tar.bz2, and most of these archives have not been provided since 2016 (recently, only tar.gz archives are provided, not tar.bz2).

It also means that a customized source archive would have to be uploaded to that server, which prevents building an RPM package from a locally cloned source tree.

Therefore, I will not build the RPM package from ceph.spec directly.

You can still use the SPEC file with your own source code instead of a downloaded tarball, but that requires modifying the SPEC file, which I want to avoid. Please refer here for more details.

Using a tarball

Ceph provides a script, make-dist, that automatically generates a tar.bz2 tarball.

After generating a tarball, we can build an RPM package from it with rpmbuild -tb /path/to/ceph/tarball.tar.bz2.

However, this fails with a confusing error:

error: Found more than one spec file in ceph-16.0.0-6971-g6352099561.tar.bz2

I have not yet figured out how to solve this, so I use an SRPM instead.

Using an SRPM (Source RPM)

Ceph also provides a script, make-srpm.sh, that generates an SRPM package.

It first runs make-dist to build a tarball, and then builds an SRPM from that tarball and ceph.spec.

When the script finishes, the directory contains the following:

/ceph $ ls

...

ceph-16.0.0-6971-g6352099561.tar.bz2 # This is a tarball generated by make-dist.

ceph-16.0.0-6971.g6352099561.el8.src.rpm # This is an SRPM package generated by make-srpm.sh.

We can then build a Ceph RPM package from the SRPM simply by running rpmbuild:

$ ./make-srpm.sh

$ rpmbuild --rebuild ceph-16.0.0-6971.g6352099561.el8.src.rpm

I will use the SRPM for packaging a Ceph RPM.

Technically, this is no different from using the SPEC file: if you manually copy the tarball (ceph-16.0.0-6971-g6352099561.tar.bz2) into ~/rpmbuild/SOURCES, rpmbuild does not try to download it from the remote server. Using the SRPM appears to be equivalent to the following:

/path/to/ceph $ ./make-dist
/path/to/ceph $ cp ceph-16.0.0-6971-g6352099561.tar.bz2 ~/rpmbuild/SOURCES
/path/to/ceph $ cp ceph.spec ~/rpmbuild/SPECS
/path/to/ceph $ rpmbuild -bb ~/rpmbuild/SPECS/ceph.spec

3. Accelerating RPM Package Build

The rpmbuild manual explains what rpmbuild --rebuild does:

- Install the contents of the SRPM (the SPEC file and the source code) into the ~/rpmbuild/ directory (SPEC file → ~/rpmbuild/SPECS, source code tarball → ~/rpmbuild/SOURCES).

- Build using the installed contents.

- Remove the SPEC file and the source code.
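These three steps can be approximated with separate commands, assuming rpm is installed and %_topdir is ~/rpmbuild: installing an SRPM as a regular user unpacks it into ~/rpmbuild, and rpmbuild's --clean, --rmsource and --rmspec options remove the build tree, sources and SPEC file afterwards. The helper name rebuild_steps is hypothetical, it only prints the commands, and it is an approximation rather than how rpmbuild implements --rebuild internally.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print commands that approximate
# `rpmbuild --rebuild <srpm>` step by step.
#   $1: path to the SRPM
#   $2: name of the spec file inside it (e.g. ceph.spec)
rebuild_steps() {
    topdir="$HOME/rpmbuild"
    # 1. Install the SRPM contents into SPECS/ and SOURCES/.
    echo "rpm -i $1"
    # 2-3. Build binary RPMs, then remove the build tree, sources and SPEC file.
    echo "rpmbuild -bb --clean --rmsource --rmspec $topdir/SPECS/$2"
}
```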

To build the contents, rpmbuild follows the instructions in the %build section of the SPEC file.

%build

...

mkdir build

cd build

CMAKE=cmake

${CMAKE} .. \

-DCMAKE_INSTALL_PREFIX=%{_prefix} \

-DCMAKE_INSTALL_LIBDIR=%{_libdir} \

-DCMAKE_INSTALL_LIBEXECDIR=%{_libexecdir} \

-DCMAKE_INSTALL_LOCALSTATEDIR=%{_localstatedir} \

-DCMAKE_INSTALL_SYSCONFDIR=%{_sysconfdir} \

-DCMAKE_INSTALL_MANDIR=%{_mandir} \

-DCMAKE_INSTALL_DOCDIR=%{_docdir}/ceph \

-DCMAKE_INSTALL_INCLUDEDIR=%{_includedir} \

-DCMAKE_INSTALL_SYSTEMD_SERVICEDIR=%{_unitdir} \

-DWITH_MANPAGE=ON \

-DWITH_PYTHON3=%{python3_version} \

-DWITH_MGR_DASHBOARD_FRONTEND=OFF ...

%if %{with cmake_verbose_logging}

cat ./CMakeFiles/CMakeOutput.log

cat ./CMakeFiles/CMakeError.log

%endif

make "$CEPH_MFLAGS_JOBS"

%if 0%{with make_check}

%check

# run in-tree unittests

cd build

ctest "$CEPH_MFLAGS_JOBS"

%endif

Here, $CEPH_MFLAGS_JOBS is defined as -j$CEPH_SMP_NCPUS, and CEPH_SMP_NCPUS is chosen carefully so that the parallel build does not exhaust the system's memory and paralyze the entire system:

# Parallel build settings ...

CEPH_MFLAGS_JOBS="%{?_smp_mflags}"

CEPH_SMP_NCPUS=$(echo "$CEPH_MFLAGS_JOBS" | sed 's/-j//')

%if 0%{?__isa_bits} == 32

# 32-bit builds can use 3G memory max, which is not enough even for -j2

CEPH_SMP_NCPUS="1"

%endif

# do not eat all memory

echo "Available memory:"

free -h

echo "System limits:"

ulimit -a

if test -n "$CEPH_SMP_NCPUS" -a "$CEPH_SMP_NCPUS" -gt 1 ; then

mem_per_process=2500

max_mem=$(LANG=C free -m | sed -n "s|^Mem: *\([0-9]*\).*$|\1|p")

max_jobs="$(($max_mem / $mem_per_process))"

test "$CEPH_SMP_NCPUS" -gt "$max_jobs" && CEPH_SMP_NCPUS="$max_jobs" && echo "Warning: Reducing build parallelism to -j$max_jobs because of memory limits"

test "$CEPH_SMP_NCPUS" -le 0 && CEPH_SMP_NCPUS="1" && echo "Warning: Not using parallel build at all because of memory limits"

fi

export CEPH_SMP_NCPUS

export CEPH_MFLAGS_JOBS="-j$CEPH_SMP_NCPUS"
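The clamping logic can be tried outside of rpmbuild. This sketch reimplements it as a standalone function (clamp_jobs is a hypothetical name) that takes the requested job count and the total memory in MB as arguments, instead of reading %{?_smp_mflags} and free -m, and keeps the spec's 2500 MB per job.

```shell
#!/bin/sh
# Hypothetical standalone version of the spec's job clamping.
#   $1: requested jobs (CEPH_SMP_NCPUS), $2: total memory in MB
clamp_jobs() {
    local ncpus=$1 max_mem=$2 mem_per_process=2500 max_jobs
    if [ "$ncpus" -gt 1 ]; then
        max_jobs=$((max_mem / mem_per_process))
        # Never run more jobs than memory allows ...
        if [ "$ncpus" -gt "$max_jobs" ]; then ncpus=$max_jobs; fi
        # ... but always run at least one.
        if [ "$ncpus" -le 0 ]; then ncpus=1; fi
    fi
    echo "$ncpus"
}
```

For example, on a hypothetical machine with 16 hardware threads and 32000 MB of memory, clamp_jobs 16 32000 prints 12, so make would run with -j12. On a real system, the memory value can be obtained with the same pipeline the spec uses: LANG=C free -m | sed -n "s|^Mem: *\([0-9]*\).*$|\1|p".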

Therefore, the build phase is already parallelized and is not the problem.

However, in the final step, rpmbuild packs the built binaries and libraries into RPM packages and compresses the payload.

On this system, rpmbuild is configured by default to compress RPM payloads with level 2 XZ, which uses only one core.

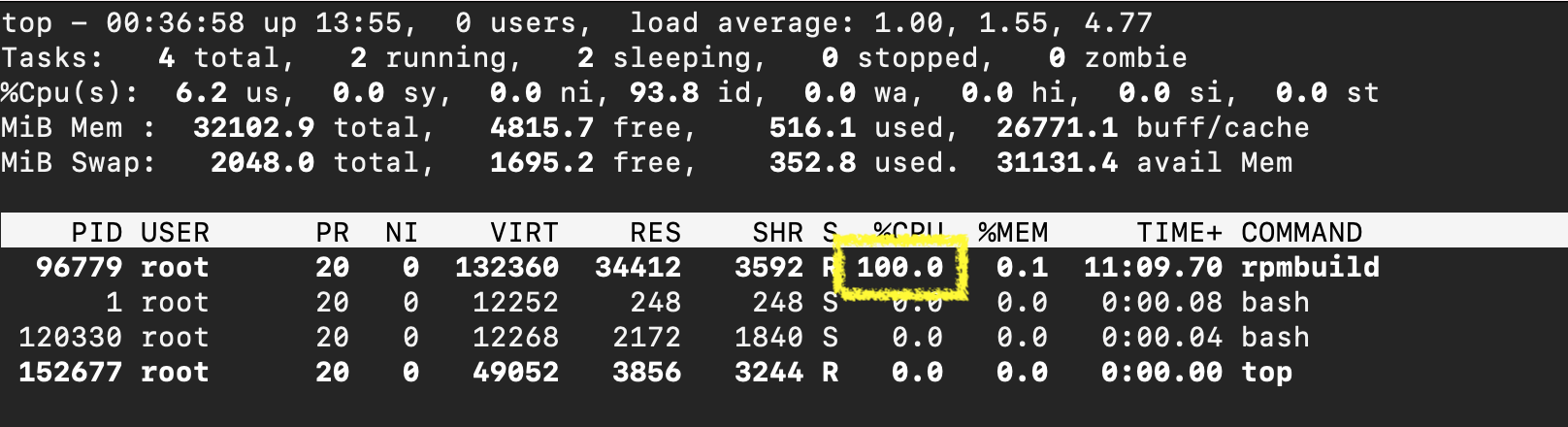

rpmbuild uses only one core for the packaging step, which becomes a huge bottleneck for Ceph RPM packaging. The rpmbuild version used here is 4.14.2.

You can check which compression setting rpmbuild currently uses with rpmbuild --showrc:

$ rpmbuild --showrc
...
-13: _binary_payload w2.xzdio
...

To see which options are available and what they mean, refer to /usr/lib/rpm/macros:

$ cat /usr/lib/rpm/macros
...
# Compression type and level for source/binary package payloads.
# "w9.gzdio" gzip level 9 (default).
# "w9.bzdio" bzip2 level 9.
# "w6.xzdio" xz level 6, xz's default.
# "w7T16.xzdio" xz level 7 using 16 thread (xz only)
# "w6.lzdio" lzma-alone level 6, lzma's default
...

According to this description, w2.xzdio means XZ compression at level 2.
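To make the payload format concrete, here is a hypothetical parser (parse_payload) that splits a _binary_payload value into compression type, level and thread count. It is based only on the examples listed in /usr/lib/rpm/macros and is a sketch for illustration, not how rpm parses the string internally.

```shell
#!/bin/sh
# Hypothetical parser for values of the form w<level>[T<threads>].<type>dio
# Prints "algo=<type> level=<level> threads=<threads|unset>".
parse_payload() {
    local rest io algo params level threads
    rest=${1#w}              # drop the leading "w" (write mode), e.g. "2T16.xzdio"
    io=${rest#*.}            # e.g. "xzdio"
    algo=${io%dio}           # e.g. "xz"
    params=${rest%%.*}       # e.g. "2T16" or "2"
    case "$params" in
        *T*) level=${params%%T*}; threads=${params#*T} ;;
        *)   level=$params;       threads=unset ;;
    esac
    echo "algo=$algo level=$level threads=$threads"
}
```

With this reading, parse_payload w2.xzdio reports no thread count, while parse_payload w2T16.xzdio reports 16 threads at the same level 2.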

There is a thorough analysis [4] of compression algorithms. According to it, xz running on a single core with default settings is unbearably slow.

The reason maintainers were reluctant to adopt multithreaded compression seems to be its memory usage. Refer to this mail archive.

You can override this rpmbuild macro with the --define option.

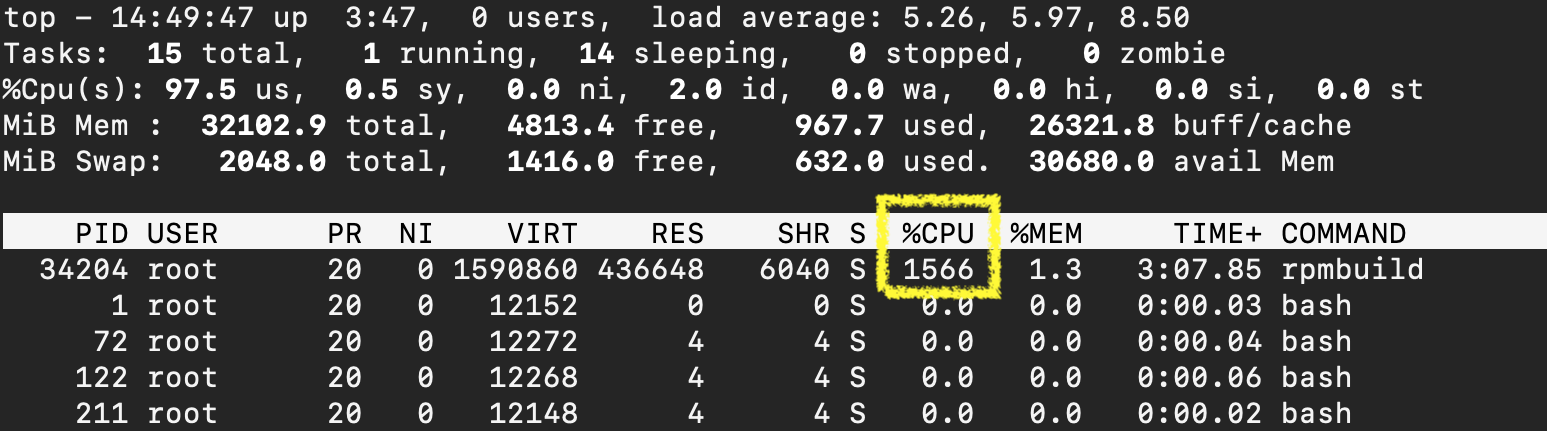

I want to keep the same compression level and only enable multithreading, so the payload setting becomes w2T16.xzdio (xz level 2 using 16 threads; I am using an AMD Ryzen 7 2700X, which has 16 hardware threads in total).

$ rpmbuild --define "_binary_payload w2T16.xzdio" --rebuild ceph-16.0.0-6971.g6352099561.el8.src.rpm

<output omitted>
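Rather than hard-coding 16, the thread count could be taken from nproc (GNU coreutils). This is a minimal sketch with a hypothetical helper name (xz_payload). The setting can also be made persistent with a line such as %_binary_payload w2T16.xzdio in ~/.rpmmacros, which rpmbuild reads at startup.

```shell
#!/bin/sh
# Hypothetical helper: build the xz payload setting for a given level,
# using one thread per online CPU unless a thread count is given.
#   $1: xz level (default 2), $2: thread count (default: nproc)
xz_payload() {
    local level=${1:-2}
    local threads=${2:-$(nproc)}
    echo "w${level}T${threads}.xzdio"
}

# Example (not executed here):
#   rpmbuild --define "_binary_payload $(xz_payload 2)" \
#       --rebuild ceph-16.0.0-6971.g6352099561.el8.src.rpm
```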

With the overridden _binary_payload, rpmbuild uses nearly all 16 hardware threads. The total rpmbuild time, measured with the time command, changed as follows:

- Without multithreaded compression: 85m3.681s

- With multithreaded compression (16 threads): 65m40.709s (-22.8%)
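The percentage above can be reproduced from the two measured durations. This is a small sketch that assumes durations written as <minutes>m<seconds>s, as printed by the shell's time keyword; the function names are hypothetical.

```shell
#!/bin/sh
# Convert a duration such as "85m3.681s" to seconds (three decimals).
to_seconds() {
    printf '%s\n' "$1" | awk -Fm '{ sub(/s$/, "", $2); printf "%.3f\n", $1 * 60 + $2 }'
}

# Percentage reduction from the first duration to the second.
reduction_pct() {
    awk -v before="$(to_seconds "$1")" -v after="$(to_seconds "$2")" \
        'BEGIN { printf "%.1f\n", (before - after) / before * 100 }'
}
```

For the measurements above, to_seconds gives 5103.681 s and 3940.709 s, and reduction_pct 85m3.681s 65m40.709s prints 22.8.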

Note that the elapsed time covers the entire process, including compile and link time, which accounts for a large portion of the build. Even so, this is a significant improvement, and I think it can be improved further!

4. Conclusion

I accelerated RPM packaging by enabling rpmbuild's multithreaded payload compression.

In August 2020, a pull request was merged into the main branch of rpm, the project that provides rpmbuild.

It enables thread-count autodetection for parallel compression (xz and zstd compression types only).

I used rpmbuild 4.14.2, so I could not benefit from this change; however, it would be worth trying as well.