Using Intel IOAT DMA

Table of Contents

I/OAT (I/O Acceleration Technology) 1 #

Intel I/OAT is a set of technologies for improving I/O performance. This post specifically illustrates how to use Intel QuickData Technology, which enables data copy by the chipset instead of the CPU, to move data more efficiently and provide fast throughput.

Using Linux DMA Engine #

I/OAT (specifically QuickData Technology) is implemented as ioatdma kernel module in Linux, and integrated into the Linux DMA subsystem.

As it is managed by the Linux DMA subsystem, it does not have its own interface but should be accessed via Linux DMA engine APIs 2.

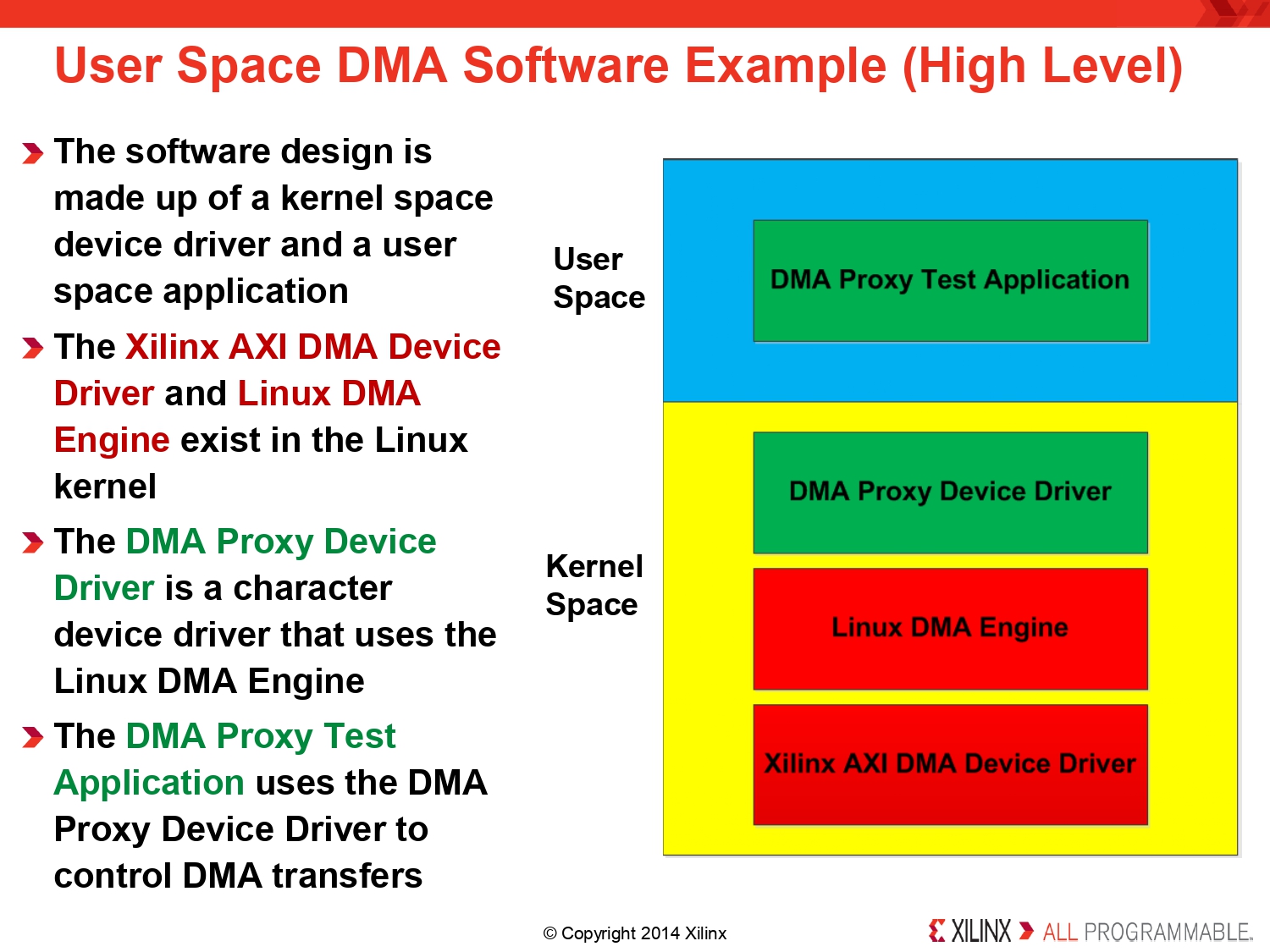

There are a lot of examples, specifically for Xilinx AXIDMA; one is here. There is also a manual from Xilinx 3.

Here, we need to implement DMA proxy device driver to provide DMA service to userspace processes, or DMA engine client device driver. A guide for DMA engine client is in [here] and [here (async TX)].

1. Allocating a DMA slave channel[source] #

// @dev: pointer to client device structure

// @name: slave channel name

struct dma_chan *dma_request_chan(struct device *dev, const char *name);

struct dma_Chan *dma_request_chan_by_mask(const dma_cap_mask_t *mask);

While Xilinx AXIDMA code uses

dma_request_slave_channel(), it is recommended to usedma_request_chan(): it returns a channel based ondma_slave_mapmatching table.

Linux DMA subsystem internally manages dma_device_list and automatically find a proper DMA device.

Personally, I use dma_request_chan_by_mask (have no idea how to use dma_request_chan). The following code gets a struct dma_chan for DMA_MEMCPY functionality.

#include <linux/dmaengine.h>

dma_cap_mask_t mask;

struct dma_chan *chan = NULL;

dma_cap_zero(mask);

dma_cap_set(DMA_MEMCPY, mask);

chan = dma_request_chan_by_mask(&mask);

if (IS_ERR_OR_NULL(chan)) { /* handle error */ }

...

2. Preparing DMA transfer #

After creating a channel, we prepare DMA transfer.

First, map pages/memory regions for DMA to get dma_attr_t, which contains a BUS address:

// Used to map kernel virtual address

dma_addr_t dma_map_single(struct device *dev, void *ptr,

size_t size, enum dma_data_direction dir);

// Used to map struct page

dma_addr_t dma_map_page(struct device *dev, struct page *page,

size_t offset, size_t size, enum dma_data_direction dir);

// Used to map physical address

dma_addr_t dma_map_resource(struct device *dev, phys_addr_t phys_addr,

size_t size, enum dma_data_direction dir);

All examples use

kmalloc()to get a memory buffer for DMA. It does not have to be a kernel memory (and the size limit is way low); instead, we can use CMA (Contiguous Memory Allocator) 4 or device physical address (e.g. using Non Volatile Memory and/ordevdax).

struct page *page = /* get page */;

size_t src_offset = 0x1000;

size_t dst_offset = 0x100000;

size_t size = 0x3000;

dma_addr_t src = dma_map_page(chan->device->dev, page, src_offset, size, DMA_TO_DEVICE);

dma_addr_t dst = dma_map_page(chan->device->dev, page, dst_offset, size, DMA_FROM_DEVICE);

The first argument (struct device *) refers to a DMA device, and struct dma_chan contains a device pointer (chan->device->dev) and you shoud always use it for the first argument.

A character device that is generated by this proxy device driver should not be used.

The last argument is a DMA direction; you can use DMA_BIDIRECTIONAL.

Although it is not clear, but what I understand is that DMA_TO_DEVICE means the data at the target address will be read by the DMA device, and DMA_FROM_DEVICE means the data at the target address will be written by the DMA device.

After mapping the buffer, we now get a descriptor for transaction.

struct dma_async_tx_descriptor *dmaengine_prep_slave_sg(

struct dma_chan *chan, struct scatterlist *sgl,

unsigned int sg_len, enum dma_data_direction direction,

unsigned long flags);

struct dma_async_tx_descriptor *dmaengine_prep_dma_cyclic(

struct dma_chan *chan, dma_addr_t buf_addr, size_t buf_len,

size_t period_len, enum dma_data_direction direction);

struct dma_async_tx_descriptor *dmaengine_prep_interleaved_dma(

struct dma_chan *chan, struct dma_interleaved_template *xt,

unsigned long flags);

struct dma_async_tx_descriptor *dmaengine_prep_dma_memcpy(

struct dma_chan *chan, dma_addr_t dest, dma_addr_t src,

size_t len, unsigned long flags);

...

There are several interface to getting a descriptor, but as of now I am not sure what is different. For now I use DMA_MEMCPY, I use dmaengine_prep_dma_memcpy() function.

struct dma_async_tx_descriptor *chan_desc;

enum dma_ctrl_flags flags = DMA_CTRL_ACK | DMA_PREP_INTERRUPT;

chan_desc = dmaengine_prep_dma_memcpy(chan, dst, src, size, flags);

if (IS_ERR_OR_NULL(chan_desc)) { /* handle error */ }

3. Configuring Completion Callback #

Before submitting a request, we first should configure completion: when a DMA is completed, a completion callback will be called.

static dma_callback(void *completion) {

complete(completion);

}

struct completion comp;

init_completion(&comp);

chan_desc->callback = dma_callback;

chan_desc->callback_param = ∁

4. Submitting a Request #

Submissison consists of two steps: (1) submit, and (2) issue pending requests.

dmaengine_submit() just pushes a request into the queue, and it will be not handled by the DMA engine until it is issued, which requires the second step with dma_async_issue_pending().

dma_cookie_t cookie;

cookie = dmaengine_submit(chan_desc);

dma_async_issue_pending(chan);

where dma_cookie_t is used to check the progress of DMA engine activity via other DMA engine calls, e.g.:

enum dma_status dma_async_is_tx_complete(struct dma_chan *chan,

dma_cookie_t cookie, dma_cookie_t *last, dma_cookie_t *used);

enum dma_status dma_async_is_complete(dma_cookie_t cookie,

dma_cookie_t last_complete, dma_cookie_t last_used);

enum dma_status dma_async_wait(struct dma_chan *chan, dma_cookie_t cookie);

enum dma_status dma_wait_for_async_tx(struct dma_tx_descriptor *tx);

5. Waiting for Completion #

Honestly, I am not sure what is different between dma_async_is_tx_complete() and dma_async_is_complete(). Need further investigation. I also saw that my reference uses wait_for_completion() instead of dma_async_wait(), and have no idea either what is difference between those two.

unsigned long timeout = wait_for_completion_timeout(&comp, msecs_to_jiffies(5000));

enum dma_status status = dma_async_is_tx_complete(chan, cookie, NULL, NULL);

if (timeout == 0) {

printk(KERN_WARNING "DMA timed out.\n");

} else if (status != DMA_COMPLETE) {

printk(KERN_INFO "DMA returned completion status of: %s\n",

status == DMA_ERROR ? "error" : "in progress");

} else {

printk(KERN_INFO "DMA completed!\n");

}

/* after that call dma_unmap_page to unmap the mapped pages */

Using SPDK 5 6 7 #

SPDK (Software Development Performance Kit) supports user-space device management and is usually used for low-latency high-performance NVMe devices, but can be also used for hig performance I/OAT DMA devices by eliminating kernel - user space context switches for DMA operations.

1. Initializing SPDK & Allocating I/OAT Channel #

To use SPDK, first we should initialize the SPDK environment using:

/* Initialize the default value of opts. */

void spdk_env_opts_init(struct spdk_env_opts *opts);

/* Initialize or reinitialize the environment library.

* For initialization, this must be called prior to using any other functions

* in this library.

*/

int spdk_env_init(const struct spdk_env_opts *opts);

struct spdk_env_opts opts;

spdk_env_opts_init(&opts);

/* modify opts.* as you want */

spdk_env_init(&opts);

And then, use spdk_ioat_probe() to probe I/OAT DMA devices. Before calling it, target I/OAT devices should be setup with UIO/VFIO with scripts/setup.sh src:

$ sudo scripts/setup.sh

/* Callback for spdk_ioat_probe() enumeration. */

typedef bool (*spdk_ioat_probe_cb)(void *cb_ctx, struct spdk_pci_device *pci_dev);

/**

* Callback for spdk_ioat_probe() to report a device that has been attached to

* the userspace I/OAT driver.

*/

typedef void (*spdk_ioat_attach_cb)(void *cb_ctx, struct spdk_pci_device *pci_dev,

struct spdk_ioat_chan *ioat);

/**

* Enumerate the I/OAT devices attached to the system and attach the userspace

* I/OAT driver to them if desired.

*/

int spdk_ioat_probe(void *cb_ctx, spdk_ioat_probe_cb probe_cb, spdk_ioat_attach_cb attach_cb);

spdk_ioat_probe() probes all I/OAT devices and calls probe_cb() callback function for each probed device, and also tries to attach the device into the userspace process with attach_cb(), if the corresponding probe_cb() returns true for the device.

struct spdk_ioat_chan *ioat_chan = NULL;

static bool probe_cb(void *cb_ctx, struct spdk_pci_deivce *pci_device) {

if (ioat_chan) {

return false;

} else {

return true;

}

}

static void attach_cb(volid *cb_ctx, struct spdk_pci_device *pci_device, struct spdk_ioat_chan *ioat) {

// Check if that device/channel supports copy operations

if (!(spdk_ioat_get_dma_capabilities(ioat) & SPDK_IOAT_ENGINE_COPY_SUPPORTED)) {

return;

}

ioat_chan = ioat;

printf("Attaching to the ioat device!\n");

}

if (spdk_ioat_probe(NULL, probe_cb, attach_cb)) { /* handle error */ }

probe_cb() returns true if there is no attached spdk_ioat_chan channel. Then spdk_ioat_probe() calls attach_cb() for the device that probe_cb returns true, then attach_cb() checks the memory copy capability and attach it.

2. DMA Transfer and Configuring Completion Callback #

SPDK requires to call spdk_ioat_build_copy() to build a DMA request, and then flush it into the device via spdk_ioat_flush(). You can use spdk_ioat_submit_copy() to do thw two at once for one request.

/* Signature for callback function invoked whan a request is completed. */

typedef void (*spdk_ioat_req_cb)(void *arg);

/**

* Build a DMA engine memory copy request (a descriptor).

* The caller must also explicitly call spdk_ioat_flush to submit the

* descriptor, possibly after building additional descriptors.

*/

int spdk_ioat_build_copy(struct spdk_ioat_chan *chan,

void *cb_arg, spdk_ioat_req_cb cb_fn,

void *dst, const void *src, uint64_t nbytes);

/* Flush previously built descriptors. */

void spdk_ioat_flush(struct spdk_ioat_chan *chan);

/* Build and submit a DMA engine memory copy request. */

int spdk_ioat_submit_copy(struct spdk_ioat_chan *chan,

void *cb_arg, spdk_ioat_req_cb cb_fn,

void *dst, const void *src, uint64_t nbytes);

spdk_ioat_build_copy() and spdk_ioat_submit_copy() receives void *cb_arg and spdk_ioat_req_cb cb_fn, the callback function to be called with a given argument pointer. The callback function will be called when a request is completed, and the userspace process can use it to determine when operation is done. Simple implementation can be:

bool copy_done = false;

static op_done_cb(void *arg) {

*(bool*)arg = true;

}

spdk_ioat_submit_copy(ioat_chan,

©_done, // optional

op_done_cb, // optional: if given, will be called by ioat_process_channel_events().

dst,

src,

io_size);

int result;

do {

result = spdk_ioat_process_events(ioat_chan);

} while (result == 0);

assert(copy_done); // must be true!

Note that the target buffer (both src and dst) must be DMAable; you should use spdk_mem_register():

/**

* Register the specified memory region for address translation.

* The memory region must map to pinned huge pages (2MB or greater).

*/

int spdk_mem_register(void *vaddr, size_t len);

void *dev_addr = mmap(NULL, device_size, PROT_READ | PROT_WRITE,

MAP_SHARED, fd, 0);

spdk_mem_register(dev_addr, device_size);

/* e.g. void *src = dev_addr + 0x1000; */

As the device is handled by a userspace process, not the kernel, the process cannot receive an interrupt but should poll the completion to check whether the submitted operation is completed.

spdk_ioat_process_events() is the function for this purpose.

/**

* Check for completed requests on an I/OAT channel.

* \return number of events handled on success, negative errno on failure.

*/

int spdk_ioat_process_events(struct spdk_ioat_chan *chan);

spdk_ioat_process_events() immediately returns how many requests are completed. You can use the returned value or copy_done variable in the example above to check whether the operation is completed.

Note that, op_done_cb() callback will be called by spdk_ioat_process_events():

int spdk_ioat_process_events(struct spdk_ioat_chan *ioat) {

return ioat_process_channel_events(ioat);

}

static int ioat_process_channel_events(struct spdk_ioat_chan *ioat) {

uint64_t status = *ioat->comp_update;

uint64_t completed_descriptor = status & SPDK_IOAT_CHANSTS_COMPLETED_DESCRIPTOR_MASK;

if (completed_descriptor == ioat->last_seen) {

return 0;

}

do {

uint32_t tail = ioat_get_ring_index(ioat, ioat->tail);

struct ioat_descriptor *desc = &ioat->ring[tail];

// Here, the given callback function is called.

if (desc->callback_fn) {

desc->callback_fn(desc->callback_arg);

}

hw_desc_phys_addr = desc->phys_addr; // This breaks the loop

ioat->tail++;

events_count++;

} while(hw_desc_phys_addr != completed_descriptor);

ioat->last_seen = hw_desc_phys_addr;

return events_count;

}

so that you should call spdk_ioat_process_events() to get notified.